Are MongoDB’s ACID Transactions Ready for High-Performance Applications?

Web app developers initially adopted MongoDB for its ability to model data as “schemaless” JSON documents. This was a welcome relief to many who had previously been bitten by the rigid structure and schema constraints of relational databases. However, two critical concerns have been a thorn in MongoDB’s side over the years: data durability and ACID transactions. MongoDB has taken incremental steps to address these issues, leading to the recent 4.0 release with multi-document transaction support. In this post, we review the details of this journey and highlight the areas where MongoDB falls short in the context of transactional, high-performance (low latency and high throughput) apps.

MongoDB Release History

1.0 Release (2009)

As highlighted in the 1.0 release announcement, MongoDB did not start out as a distributed database with automatic sharding and replication on day 1. The original design was only suitable for single master, master/slave and replica pair environments. This approach was very similar to that of the single-node MySQL/PostgreSQL RDBMSs that were popular at that time. Instead, the focus of the database was to provide a JavaScript-y data store for web developers.

2.0 Release (2011)

By the time the 2.0 release rolled along, MongoDB had added the notion of Replica Sets (a 3-node auto-failover mechanism that replaced the 2-node Replica Pair) and Sharded Clusters (each shard modeled as a Replica Set and multiple Replica Sets coordinated using a separate Config Server). However, multiple reports of MongoDB losing data had surfaced by then. This post from Nov 2011 led to significant consternation in the MongoDB developer community. The original design choices had all backfired: giving app developers control over when writes are acknowledged (with a default writeConcern of 0), running without journaling to win fast write benchmarks, and relying on global concurrency control with collection-level locks. As this HackerNews response from MongoDB shows, developers were pushing MongoDB beyond simple single-node JavaScript apps into the world of mission-critical apps that required linear scalability and high availability without compromising durability and performance.

Even though it had designed its replication architecture for the Available and Partition-tolerant (AP) end of the CAP Theorem spectrum, MongoDB continued to market itself as a CP database leading to confused users and unhappy customers. As quoted below, Jepsen analyses from 2013 (v2.4.3) and 2015 (v2.6.7) highlighted the flaws in such claims by noting the exact cases where data consistency is lost.

Mongo’s consistency model is broken by design: not only can “strictly consistent” reads see stale versions of documents, but they can also return garbage data from writes that never should have occurred. The former is (as far as I know) a new result which runs contrary to all of Mongo’s consistency documentation. The latter has been a documented issue in Mongo for some time. We’ll also touch on a result from the previous Jepsen post: almost all write concern levels allow data loss.

3.0 Release (2015)

MongoDB traced many of its initial durability and performance problems to its underlying MMAPv1 storage engine. MMAPv1 is a BTree-based engine that cannot scale to multiple CPU cores, suffers from poor concurrency (it uses collection-level locks) and does not support compression for reducing disk consumption. The net result was that MMAPv1 showed extremely poor performance for write-heavy workloads. To mitigate these issues, MongoDB acquired WiredTiger in December 2014 and made it an optional storage engine starting with the 3.0 release (March 2015). WiredTiger replaced MMAPv1 as the default engine starting with 3.2 (Dec 2015). However, replication continued to use the v0 protocol, where data loss remained a big concern for users, especially in multi-datacenter deployments. The Jepsen analysis notes the following:

Protocol version 0 remains popular with users of arbiters, especially for three-datacenter deployments where one datacenter serves only as a tiebreaker. Specifically, when two DCs are partitioned, but an arbiter can see both, v1 allows the arbiter to flip-flop between voting for primaries in both datacenters, where v0 suppresses that flapping behavior. In both protocol versions, in order to preserve write availability in both datacenters, users cannot choose majority write concern. This means that when inter-DC partitions resolve, successful writes from one datacenter can be thrown away.
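To make the failure mode in the quote above concrete, here is a toy Python model of a two-datacenter deployment with one data-bearing node per datacenter plus an arbiter. It is not MongoDB code: the function names, the log contents and the way `w` is passed are illustrative assumptions, and the model ignores elections entirely. It only sketches why a write acknowledged with a non-majority write concern can be discarded once the partition heals.

```python
def is_acknowledged(w, nodes_holding_write, voting_members):
    """Return True if a write held by `nodes_holding_write` data-bearing
    nodes satisfies write concern `w` ("majority" or an integer)."""
    if w == "majority":
        return nodes_holding_write >= voting_members // 2 + 1
    return nodes_holding_write >= w


def rolled_back_entries(old_primary_log, new_primary_log):
    """Entries the old primary discards after the partition heals:
    everything past the longest common prefix of the two logs."""
    common = 0
    for mine, theirs in zip(old_primary_log, new_primary_log):
        if mine != theirs:
            break
        common += 1
    return old_primary_log[common:]


# Hypothetical scenario: 2 data-bearing nodes + 1 arbiter = 3 voting members.
VOTING_MEMBERS = 3
shared_history = ["order-1", "order-2"]

# During the partition, each datacenter's node holds writes the other never saw.
dc1_log = shared_history + ["order-3", "order-4"]
dc2_log = shared_history + ["order-5"]

# With w=1, the isolated primary acknowledges writes that only it holds...
w1_acked = is_acknowledged(1, nodes_holding_write=1, voting_members=VOTING_MEMBERS)
# ...while w="majority" would refuse, trading write availability for safety.
majority_acked = is_acknowledged("majority", nodes_holding_write=1, voting_members=VOTING_MEMBERS)

# When the partition heals and DC2's node remains primary, DC1 rolls back.
lost = rolled_back_entries(dc1_log, dc2_log)
print(w1_acked, majority_acked, lost)  # True False ['order-3', 'order-4']
```

In this sketch the two writes that DC1 acknowledged with `w=1` are exactly the ones thrown away, while the majority write concern avoids the loss only by refusing to acknowledge writes during the partition.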

3.4 Release (2016)

MongoDB updated its default replication protocol from an inherently unsafe v0 to a safer v1 in the 3.2 release, followed by major bug fixes in 3.4. Even though actual data replication remained asynchronous (where secondaries pull from the primary in a Replica Set), the Replica Set primary election was now determined using the Raft distributed consensus protocol. As a result, the window of write unavailability when the primary is lost or partitioned away for any reason became shorter than before, since the secondaries can now auto-elect a new primary using Raft.

In addition to the above replication protocol changes, MongoDB started addressing dirty reads (incorrect data being read) in 3.2 using the majority read concern and stale reads (latest data not being read) in 3.4 using the linearizable read concern. These changes allowed MongoDB 3.4 to pass Jepsen testing. However, as we will see in the next few sections, the hidden cost developers pay for doing linearizable reads on MongoDB is poor performance i.e. high latency and low throughput.

4.0 Release (2018)

After evangelizing for more than a decade on the benefits of fully-denormalized document data modeling that obviates the need for multi-document ACID transactions, MongoDB finally added single-shard ACID transaction support in the recently released 4.0 version. These single-shard transactions enforce ACID guarantees on updates across multiple documents as long as all the documents are present in the same shard (i.e. stored on the single primary of a Replica Set). True multi-shard transactions where developers are abstracted from the document-to-shard mapping are not yet supported. This means MongoDB Sharded Cluster deployments cannot leverage the multi-document transactions feature yet.

How Do Single-Shard Transactions Work in MongoDB?

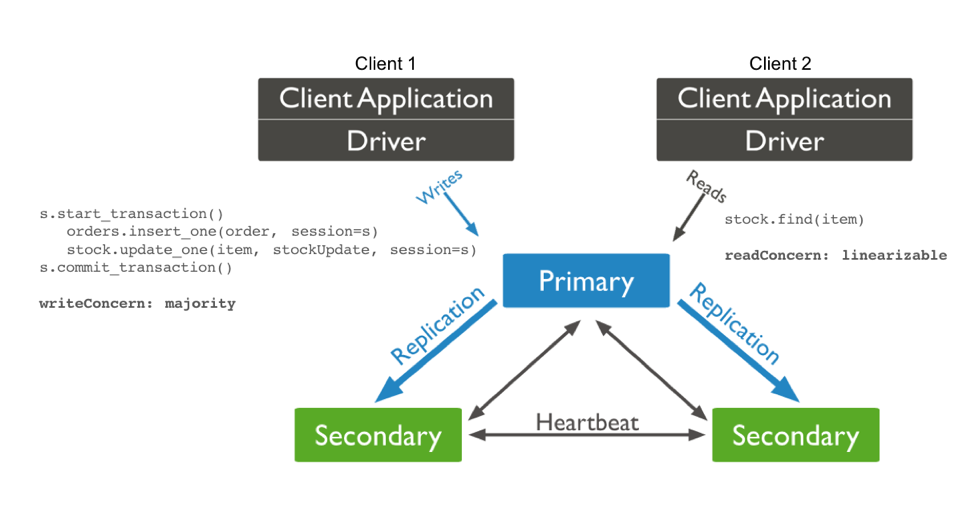

As we observed in the previous section, MongoDB’s path to transactions has been a decade long with many twists and turns. Given its single-write-node origins, it should not come as a surprise that this path (even in the context of a single shard) has necessitated the replacement of both its storage engine and its replication protocol. Let’s review how single-shard transactions actually work in MongoDB 4.0. The figure shows an e-commerce app that has 2 MongoDB clients: Client 1 performs the transaction and Client 2 reads a record updated by the transaction of Client 1.

MongoDB 4.0 Transactions in Action

Client 1 is inserting a new order document into the Orders collection while also updating the stock of the ordered item (stored as a different document in a different collection), assuming the order ships successfully. Clearly these two operations have to be done in an ACID-compliant manner, hence the use of start_transaction and commit_transaction statements before and after. As documented, the only writeConcern that’s suitable for high data durability is majority. This means a majority of replicas (2 in this example) should commit the changes before the primary acknowledges the success of the write to the client. The transaction will remain blocked until at least 1 of the 2 secondaries pulls the update from the primary using asynchronous replication, which is susceptible to unpredictable replication lag, especially under heavy load. This makes MongoDB’s single-shard transactional writes slower than those observed in other transactional NoSQL databases such as YugabyteDB, which uses Raft-based synchronous replication even for data replication (and not simply for leader election).
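The blocking behavior described above can be approximated with a small back-of-the-envelope model. The Python sketch below is not MongoDB code; the function name, its parameters and the millisecond figures are hypothetical. It assumes each secondary's lag is measured from the moment the primary applies the write, and that the primary itself counts toward the majority.

```python
def majority_commit_latency_ms(primary_apply_ms, secondary_lag_ms):
    """Estimate how long a w:"majority" commit stays blocked.

    primary_apply_ms: time for the primary to apply the write locally.
    secondary_lag_ms: per-secondary delay before it has pulled the write.
    """
    members = 1 + len(secondary_lag_ms)
    majority = members // 2 + 1
    secondaries_needed = majority - 1  # the primary is one member of the majority
    if secondaries_needed == 0:
        return primary_apply_ms
    # The commit unblocks once the fastest `secondaries_needed` secondaries catch up.
    fastest_first = sorted(secondary_lag_ms)
    return primary_apply_ms + fastest_first[secondaries_needed - 1]


# 3-node Replica Set: the commit waits for the faster of the 2 secondaries.
light_load = majority_commit_latency_ms(2, [15, 120])
heavy_load = majority_commit_latency_ms(2, [400, 900])
print(light_load, heavy_load)  # 17 402
```

Under this model the commit latency is set by the fastest secondary, so when every secondary lags (the heavy-load case), the client waits for the full replication delay.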

Let’s assume that before the primary acknowledges the transaction commit to Client 1, Client 2 tries to read the stock of the same item. As we have highlighted previously in Overcoming MongoDB Sharding and Replication Limitations with YugabyteDB, the only way to perform a strongly consistent read in MongoDB is to use the linearizable read concern (and not the majority read concern). Even though transactional writes support Snapshot Isolation, where a previous snapshot of data can be served from the primary, non-transactional writes (those done without start_transaction and commit_transaction) offer no such guarantee. Linearizable reads ensure that readers get the truly committed data irrespective of whether a transactional or non-transactional write was used to update the data. The following is the specific quote from the MongoDB documentation.

Unlike “majority”, “linearizable” read concern confirms with secondary members that the read operation is reading from a primary that is capable of confirming writes with { w: “majority” } write concern. As such, reads with linearizable read concern may be significantly slower than reads with “majority” or “local” read concerns.

Consulting other replicas for serving strongly consistent reads is the second and more significant source of slowdown in MongoDB in the context of high-performance transactional apps. The latency gets worse in geo-distributed deployments where each node may be in a different region altogether. Other transactional NoSQLs such as YugabyteDB do not suffer from this problem. YugabyteDB relies on Raft-based synchronous data replication where the follower replicas are synchronously updated by the leader replica. This means YugabyteDB can serve a strongly consistent read straight off the leader of a shard because it is guaranteed to hold the most current value clients are allowed to see.
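A rough way to see why geo-distribution hurts linearizable reads is to model the extra round trip explicitly. The Python sketch below is an illustration under stated assumptions, not a measurement: the function name and latency numbers are hypothetical, "local" and "majority" reads are modeled as answered by the primary without waiting on other nodes, and a "linearizable" read is modeled as waiting for enough secondaries to respond to form a majority together with the primary.

```python
def read_latency_ms(read_concern, local_read_ms, secondary_rtt_ms):
    """Estimate read latency at the primary for a given read concern.

    local_read_ms: time for the primary to read the document locally.
    secondary_rtt_ms: round-trip time from the primary to each secondary.
    """
    if read_concern in ("local", "majority"):
        # Modeled as served from the primary with no extra network hop.
        return local_read_ms
    if read_concern == "linearizable":
        # Secondaries needed so that, with the primary, a majority has responded.
        secondaries_needed = (1 + len(secondary_rtt_ms)) // 2
        if secondaries_needed == 0:
            return local_read_ms
        nearest_first = sorted(secondary_rtt_ms)
        return local_read_ms + nearest_first[secondaries_needed - 1]
    raise ValueError(f"unknown read concern: {read_concern}")


# Same-region Replica Set vs. one whose secondaries sit in other regions.
same_region = read_latency_ms("linearizable", 1, [1, 2])
geo_distributed = read_latency_ms("linearizable", 1, [70, 140])
majority_read = read_latency_ms("majority", 1, [70, 140])
print(same_region, geo_distributed, majority_read)  # 2 71 1
```

In this model the linearizable read pays at least one cross-region round trip on every request, which is the cost a leader-local read avoids.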

Impact on Secondary Indexes

Keeping a secondary index consistent with the primary key updates usually requires multi-document transactions where the primary key is updated in document1 and the secondary index metadata is updated in document2. MongoDB does not support Global Secondary Indexes, where all the secondary index metadata is stored globally, but rather supports Local Secondary Indexes, where a secondary index can only be local to the shard (i.e. document1 and document2 are in the same Replica Set). Note that this local index does not remain consistent as writes to the primary key are undertaken. Local indexes have to be built manually in a rolling manner as often as needed. Special cases such as unique indexes require all new writes to be stopped until the indexing completes.

With the introduction of single-shard transactions in 4.0, it is natural to expect MongoDB to deprecate its current out-of-band indexing approach in favor of a new approach that results in completely-online, strongly consistent local indexes. However, the indexing approach remains unchanged in the 4.0 release.

Hidden Cost of MongoDB Transactions

MongoDB adding single-shard transactions is a welcome change from the past years when it was either having durability issues or de-emphasizing the need for ACID transactions altogether. However, by keeping the original sharding and data replication architecture intact, it has diminished the applicability of its transactions to high-performance apps with strict latency and throughput needs. An application using MongoDB 4.0 single-shard transactions has to deal with the following problems.

Lack of Horizontal Scalability

The lack of transaction support in MongoDB Sharded Clusters means that converting the 3-node Replica Set to a fully sharded system that can provide horizontal write scalability is not even an option. For many fast-growing online services this is a killer problem since they cannot fit the write volume on the single primary node.

High Latency

As described in the previous section, ensuring fast and durable writes while simultaneously serving fast and strongly consistent reads leads to MongoDB suffering from high latency on both the write and read paths. App developers are now forced to add a separate in-memory cache such as Redis to decrease the read latency. But now they have to deal with the possibility of data becoming inconsistent between the cache and the database. If the data volume is high enough and the data being queried cannot fit into the in-memory store cleanly, then data that is still being accessed will get evicted from the cache more often. This will in turn lead to most reads hitting the database, which means the original low latency goal for the cache is no longer satisfied. At the same time, the data tier has become twice as operationally complex.
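The eviction effect described above is easy to reproduce with a toy cache. The Python sketch below assumes an LRU eviction policy and a deliberately unfavorable access pattern (repeatedly cycling through the whole working set); the function name, key counts and capacities are hypothetical, and real workloads will usually fall somewhere between the two results.

```python
from collections import OrderedDict


def lru_hit_ratio(accesses, capacity):
    """Replay `accesses` against an LRU cache of `capacity` keys and
    return the fraction of accesses served from the cache."""
    cache = OrderedDict()
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)  # mark as most recently used
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / len(accesses)


# Working set of 100 keys, read in the same order 10 times over.
working_set = list(range(100)) * 10

fits = lru_hit_ratio(working_set, capacity=100)
too_small = lru_hit_ratio(working_set, capacity=80)
print(fits, too_small)  # 0.9 0.0
```

When the working set fits, only the first pass misses. With a cache just 20% too small, this access pattern evicts each key shortly before it is needed again, so every read falls through to the database.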

Low Throughput

A Replica Set has a single primary node to serve the writes. This means that all the write requests, even when they are for unrelated documents, have to go through the primary. The secondary members can potentially serve reads and hence help maintain a good read throughput, but are completely unutilized in the context of writes. For write-heavy workloads this leads to a significant waste of compute and storage resources since 2 nodes out of a 3-node replica set are not contributing their fair share. More contention at the primary node means lower throughput for the overall cluster.

Summary

Even though it has improved its transactional capabilities over the last few years, MongoDB is still architecturally inferior to modern transactional databases such as YugabyteDB. Using transactions in MongoDB today essentially means giving up on high performance and horizontal scalability. At YugaByte, we believe this is a compromise fast-growing online services should not be forced to make. As shown in the table above, we architected YugabyteDB to simultaneously deliver transactional guarantees, high performance and linear scalability. A 3-node YugabyteDB cluster supports both single-shard and multi-shard transactions and seamlessly scales out on-demand (in single region as well as across multiple regions) to increase write throughput without compromising low latency reads.

What’s Next?

- Review key limitations of MongoDB sharding and replication architecture.

- Compare YugabyteDB to databases like Amazon DynamoDB, Apache Cassandra, MongoDB and Azure Cosmos DB.

- Get started with YugabyteDB on a local cluster.

- Contact us to learn more about licensing, pricing or to schedule a technical overview.