My Time as a Yugabyte Software Engineering Intern

February – A Unique Introduction

It was late February, and I had spent the past several weeks learning everything I could about the distributed database ecosystem. As an intern on the Investment Team at 8VC, I had gotten the chance to work on technical due diligence under Partner & CTO Bhaskar Ghosh, taking a multitude of pitches every week and constantly shifting contexts. One of those companies speaking with us was Yugabyte, and I was soon consumed by the world of NewSQL – battling architectures (Spanner v. Calvin), language variants (Postgres v. MySQL), and cloud providers. Thanks to Bhaskar sharing his wealth of operational knowledge and experience in the space, I found myself having just enough high-level knowledge to understand the conversations we were having with co-founders Karthik and Kannan. It quickly became clear to me that they are world-class engineers and entrepreneurs, as well as some of the few people with the background and grit to make a company in this space succeed. Part of that belief came from the stories I heard about their time at Oracle or leading HBase at Facebook, but their real strengths and passion came across in our conversations about competitive landscape, go-to-market strategy, and bottoms-up developer evangelism

Over the next couple weeks, many long write-ups were penned on the future of open source databases, growing OLTP budgets, and the value of transactional consistency at scale. Even all water-cooler conversation began to center around Yugabyte. My other work fell into the backdrop as we burnt the midnight oil to make the partnership happen, and by the time the process had come to a close, I had decided that I wanted to spend some time hands-on engineering at Yugabyte.

April – Interviewing at Yugabyte

I hadn’t written much code during my time at 8VC so I spent some time freshening up on the work I’d done preparing for interviews eight months prior. I was initially concerned about database implementation related questions (as my limited knowledge in the space was purely self-taught) but those worries were soon proven to be unfounded as the interviews covered your typical data structures and algorithms.

To me, this was a great opportunity to get to know two of the engineering leaders at Yugabyte and get a better understanding of the organizational structure of the engineering team. I soon learned that the structure of the engineering organization mirrored that of Yugabyte’s architecture, with the additional flexibility of being able to do work across layers.

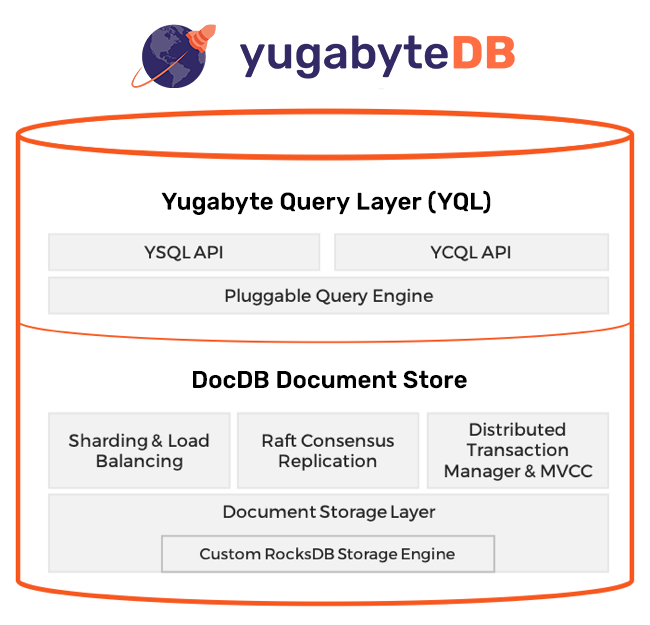

My first interviewer, Bogdan, leads the DocDB team at Yugabyte. For those who may be less familiar, DocDB is the core data storage layer that is responsible for ensuring transactional consistency, sharding tables, guaranteeing high performance, and replicating data. It is a distributed key-value store that heavily modifies and extends the popular RocksDB storage engine out of Facebook. This conversation reminded me of many of the reasons why I was initially so intrigued by what Yugabyte had built – qualities such as auto-scaling of deployments and ensuring ACID transactions at scale. These are difficult guarantees in a traditional leader-follower setting, let alone a distributed one.

My second interview was with Neha, who leads the YQL team at Yugabyte which is responsible for everything query layer related – including planning, pushdowns and other optimizations, and execution. Yugabyte has been able to adopt the entire top-half of PostgreSQL into their codebase, and that’s part of what makes it so interesting. Not only do they get Postgres compatibility “out of the box,” but they also have a pluggable query layer with YCQL (Cassandra-compatible), YSQL (Postgres-compatible), and YEDIS (REDIS-compatible – not in active development). This allows Yugabyte to inject into multiple different workloads and markets and attract users from both the SQL and NoSQL worlds – another core strength that I had identified a few months before.

Outside of the core, fully open source database, there is surplus of other work to be done. Yugabyte has a team responsible for both the self-managed DBaaS platform (Yugabyte Platform) and the fully-managed solution (Yugabyte Cloud, currently in Beta). This touched on another important secular movement – as enterprises move to the cloud, it’s vital that software vendors stay cloud-agnostic and support a variety of deployments, including those that are hybrid and/or multi-cloud. It was cool to see Yugabyte help prevent cloud vendor lock-in and open themselves up to a wide potential customer-base by supporting deployments on GCP, Azure, and AWS. In my time at Yugabyte, I felt that it was important to learn about Kubernetes deployments and deployments in the public clouds, even if I didn’t get the chance to touch any code in the space.

May – Getting Started & Adapting to a Remote Internship

After officially wrapping up my time at 8VC, I moved over to Yugabyte in late May at what happened to be a very exciting time. They had just announced the hiring of their new CEO, Bill Cook, an enterprise software veteran – bringing with him the experience of leading Pivotal Software and Greenplum. Furthermore, they would announce the $30M Series B, led by 8VC, within my first couple weeks at the company. This saw a wave of inbound attention in the community Slack and the broader database developer community, which further cemented my confidence in the value of what the team was building (as if it needed to be!).

By virtue of my time at 8VC, I had the fortune of starting my internship with a “strong prior”. I found that I’d have picked up that knowledge quite quickly regardless – the team was super welcoming and many members such as Bogdan and Neha scheduled regular 1:1’s early on in my internship to help me acclimate. Had my internship been in-person, a whiteboarding session or two may have been the desired medium to explain important concepts like the Spanner architecture or Raft consensus, but in a remote environment due to current health and safety protocols, Slack quickly became a constant source of information and prompt answers to my questions. Not to mention I could always rely on the docs for more info. Additionally, through regular design review meetings, I was able to learn about existing core implementations such as the Transactional I/O Path, as well as new features like automatic tablet splitting.

By the end of my first couple days, I had gotten my development environment set up on a work machine, built a YugabyteDB implementation from source, began to experiment with its features, and started to work on my first code change.

June – Navigating the Codebase

Although not my first technical internship, this was my first time navigating a complex C/C++ codebase and working at a company of Yugabyte’s size. Bogdan got me started on a couple of simple tasks which also served as a good introduction to Yugabyte’s CI/CD and unit testing suite. I soon realized that I could not treat development the same way I would with a data structures or systems class – I instead had to be strategic about compilation and building, as a clean build takes a significant amount of time. For revisioning and code review, Yugabyte uses Phabricator + Arcanist and Git for version control, which again was a new process for me. However, nearing the end of my first couple weeks, I fell into a good workflow for development, building, and testing.

July – My Major Project

Once I became comfortable with developing at Yugabyte, I transitioned to my core intern project and was assigned an intern manager, Nicolas, to supervise the rest of my internship.

I spent the majority of my summer working on a project that we aptly labeled “Tablegroups” – giving the user the ability to define groups of tables whose data will be stored together on the same node(s). This comes as a very natural extension to Yugabyte’s existing implementation of colocated tables. Jason, an engineer on the core database, had previously put in a tremendous amount of work to make colocation possible in Yugabyte. I was able to use much of his work as the foundation to build Tablegroups on.

Ordinarily, in an OLTP setting, a database has many tables, most of which are very small in data size. In a relational model, these tables are commonly queried together and joined together. What if you could ensure that the data for those tables lived together on the same nodes? This becomes more valuable the larger a cluster gets; even in the simplest setting where you have a single tablet per table, you will frequently need to join across nodes or even regions and incur costs over the network. This is the fundamental problem that colocation solves. In the status quo implementation, you can create a database as follows: CREATE DATABASE dbcolo COLOCATED=true; This creates a single “parent” table and tablet called the colocation tablet (that is replicated according to your cluster’s replication factor). Any future tables or indexes in that database will be added to the colocation tablet as “child” tables unless they opt-out of colocation (which you may want to do if you expect that table to grow large and require sharding).

As you can probably guess, a Tablegroup is functionally a parent table with a single colocation tablet for future relations in that Tablegroup to be added to.

My work was split into a few major components:

- Creating the design for the Tablegroups feature, formalizing it into a design doc, and presenting it in a bi-weekly (twice a week, not fortnightly!) design review. I then iterated on the design as needed and incorporated valuable feedback from other members of the Yugabyte team.

- Implementing the query layer (YSQL) portion of Tablegroups. This included changes to the grammar, adding a new Postgres system catalog, building RBAC for Tablegroups, and other crucial usability features.

- Handling the storage (DocDB) implementation. Ensuring that the master has the proper metadata about Tablegroups as well as ensuring that tables are added to the proper tablet.

After spending a couple weeks on design I had come up with the following rough outline:

- I first needed to extend the grammar, while leaving room for future enhancements (such as co-partitioning or interleaving – more detail in a future design doc).

- In order for Postgres to be aware of Tablegroups a new table,

pg_tablegroup, needed to be added to the per-database metadata. This was the first time that Yugabyte has added to the Postgres system catalog, so I needed to be extra careful about backwards compatibility as there were unknown-unknowns. I also had to store metadata per-relation about Tablegroup information. - RBAC is important for users, so I had to implement

GRANT/REVOKE/ALTER DEFAULT PRIVILEGESfor tablegroups. - In order to execute

CREATE/DROPstatements with Tablegroups on the storage layer, I needed to add a series of RPCs. However, I could build on top of Jason’s earlier work that enabled adding tables to tablets, creating a table-level tombstone to mark a table as deleted (and delete the data during compactions), and maintain table-level metadata about colocation. - Catalog manager will need to maintain in-memory maps of information related to Tablegroups and their corresponding tablets. These maps will need to be reloaded based on persistent tablet info on restarts.

I then dove into the query layer implementation. Here, I relied on Mihnea (another core database engineer) and Jason for code reviews and to help point out any scenarios for testing that I may not have considered. We soon encountered a roadblock where my initial diff was backwards incompatible – meaning that in the event of an existing cluster upgrade, usability would completely break due to pg_tablegroup lookups failing. This was resolved by (1) using a PG global to that is set in postinit to signal whether the physical pg_tablegroup catalog exists and (2) adding a new reloption in pg_class to store the Tablegroup OID (if any) instead of a new column. The longer term fix is to enable a better upgrade path for initdb (which is in the works!).

On the DocDB side, I’ve been able to get the whole CREATE / DROP TABLEGROUP flow working from end-to-end, as well as DDL/DML for relations within the Tablegroup. There’s still a couple outstanding issues, but that’s what the GitHub issue queue is for! The biggest thing here was making sure to test for many scenarios, such as writing low-level tests to ensure that the tables are being added to the proper tablets, enhancing pg_regress tests for DML operations, and testing that state is preserved upon restart, amongst others.

July (cont.) – Tablegroups & Tablespaces

You might be wondering how users might be able to leverage Tablegroups and where the future is headed? Here’s some small insight into that.

Somewhat orthogonal to the idea of Tablegroups is that of using Postgres Tablespaces to enable row-level geo-partitioning. Traditionally, Tablespaces are used to control the locations in a file system where database objects are stored. However, in a distributed environment, and a cloud native one, this level of control of storage on-disk makes little sense. It’s only natural to repurpose this feature to represent its equivalent in a distributed setting.

To concretize this idea, let’s say you have a table with a column that represents location. Your deployment is in three different geos: {Americas, Europe, Asia}. Based on the location column, you want to define the geo in which each row of data is stored. This is extremely valuable in use cases such as GDPR and tiering data based on usage. Tablespaces could be used to achieve this – giving the user the ability to map a partition to a physical region.

The vision with Tablegroups is that it will eventually be combined with row-level geo-partitioning to create a unique combination of features in the distributed SQL world. If you had multiple tables with the above geo-partitioning scheme, you could place the tables together in a Tablegroup per-geo!

More information about the internals of this feature as well as its future can be found in an upcoming blog post! This feature is still in beta but will continue to be worked on as part of the product roadmap. There’s work yet to be done (i.e. being able to alter the Tablegroup of a table, better load balancing for colocated tables, automatically pulling fast-growing tables out of Tablegroups …) – so if you want to contribute, please join the community Slack and ask away!

August – Conclusion

When reflecting on what I expected coming into the internship, I find myself even more excited about what Yugabyte is building for the cloud native world. I see Yugabyte’s products as a huge enabler of digital transformation, especially for any enterprise that requires transactional consistency at scale.

Through this end-to-end experience I got the opportunity to implement a large feature in a complex codebase as well as learn how to scope work and prioritize features in a V1, and with Nicolas’s help I was able to stay on track and effectively manage my project. Beyond the technical work, I learned a lot from him about making the most out of a career in tech, especially from hearing about his past life at Facebook and the rest of his career in the Valley.

As far as technical work goes, I’ve become increasingly passionate about distributed systems & databases, and will be digging into some problems surrounding database encryption on my own time these next few months. I’ve thoroughly enjoyed all that I’ve learned since May, even if the team has been distributed.

If anything I’ve written interests you, please feel free to reach out on LinkedIn or over email at vivek.gopalan@yale.edu. Would love to chat with y’all about Yugabyte and maybe inspire another contributor or two (or more!) along the way.