Yugabyte Database Engineering Update – August 20, 2018

Time for another update from the engineering team for the YugabyteDB database! Let’s dive in and see all the progress we’ve made.

As we reviewed in “Docker, Kubernetes and the Rise of Cloud Native Databases”, Kubernetes has benefited from rapid adoption to become the de facto choice for container orchestration. This has happened in a short span of only four years since Google open sourced the project in 2014. YugabyteDB’s automated sharding and strongly consistent replication architecture lends itself extremely well to containerized deployments powered by Kubernetes orchestration. In this post we’ll look at the various components involved in getting YugabyteDB up and running as Kubernetes StatefulSets.

…

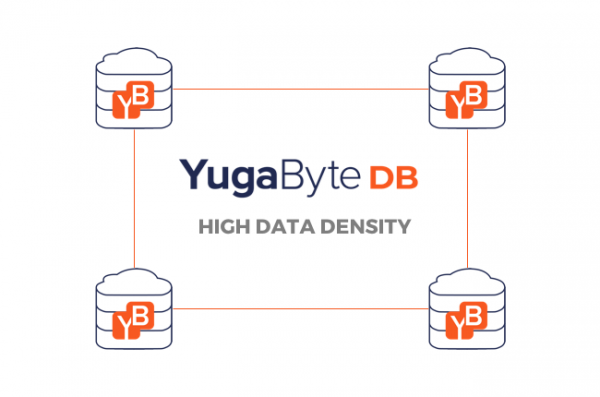

For ever-growing data workloads such as time series metrics and IoT sensor events, running a highly dense database cluster where each node stores terabytes of data makes perfect sense from a cost efficiency standpoint. If we spin up new data nodes only to get more storage per node, we waste a significant amount of expensive compute resources. However, running multi-terabyte data nodes with Apache Cassandra as well as other Cassandra-compatible databases (such as DataStax Enterprise) is not an option.

…

Apache Cassandra is a distributed open source database that can be referred to as a “NoSQL database” or a “wide column store.” Cassandra was originally developed at Facebook to power its “Inbox” feature and was released as an open source project in 2008. Cassandra is designed to handle “big data” workloads by distributing data, reads and writes (eventually) across multiple nodes with no single point of failure.

…

Editor’s note: This post was originally published August 8, 2018 and has been updated as of May 28, 2020.

As we saw in “How Does Consensus-Based Replication Work in Distributed Databases?”, Raft has become the consensus replication algorithm of choice when it comes to building resilient, strongly consistent systems. YugabyteDB uses Raft for both leader election and data replication. Instead of having a single Raft group for the entire dataset in the cluster,

…

Explore how consensus-based replication is implemented in distributed databases, and dive into Paxos and Raft, the most commonly used leader-based consensus protocols.

YugabyteDB has consistent, high-performance secondary indexes, built on top of distributed ACID transactions, to help retrieve data.

After billions of dollars in capital expenditure and reference customers in every major vertical, Google Cloud Platform has finally emerged as a credible competitor to Amazon Web Services and Microsoft Azure when it comes to enterprise-ready cloud infrastructure. While Google Cloud’s compute and storage offerings are easier to understand, making sense of its various managed database offerings is not for the faint-hearted. This post introduces app developers to the major Google Cloud database services,

…

In this blog, we dive deeper into Percolator and Spanner as well as the open source databases (YugabyteDB, for example) that they have inspired.

First-generation NoSQL databases dropped ACID guarantees with the rationale that such guarantees are needed only by old-school enterprises running monolithic, relational applications in a single private data center. The premise was that modern distributed apps should instead focus on linear database scalability along with low-latency, mostly accurate, single-key-only operations on shared-nothing storage (e.g., those provided by the public clouds).

Application developers who blindly accept the above reasoning are not serving their organizations well.

…